
Using VS Code

This section explains how to use MLEK reference applications with the Arm CMSIS Solution extension for VS Code.

Install Required Packs

Install the CMSIS-MLEK pack and any required board support packs:

cpackget add ARM::cmsis-mlek

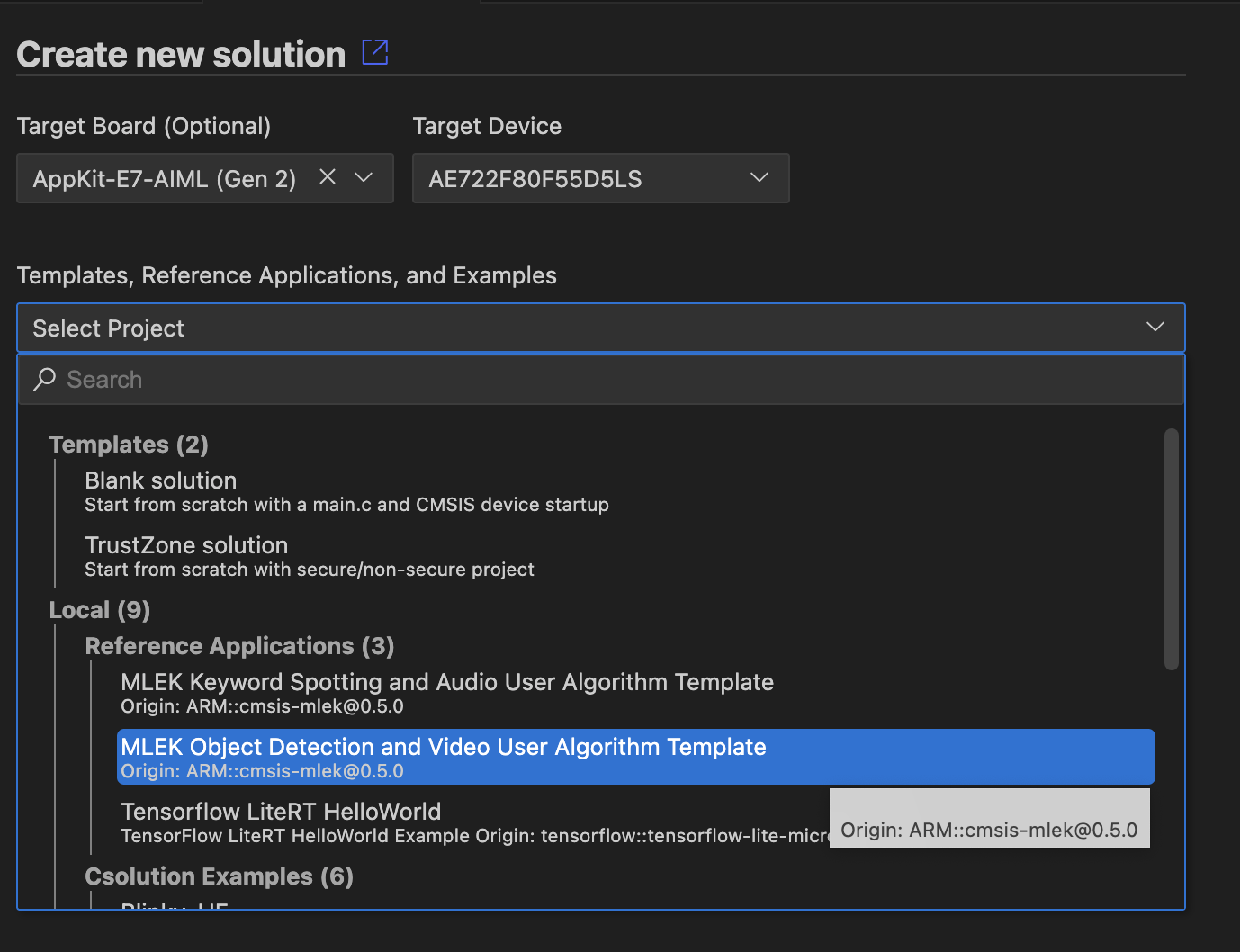

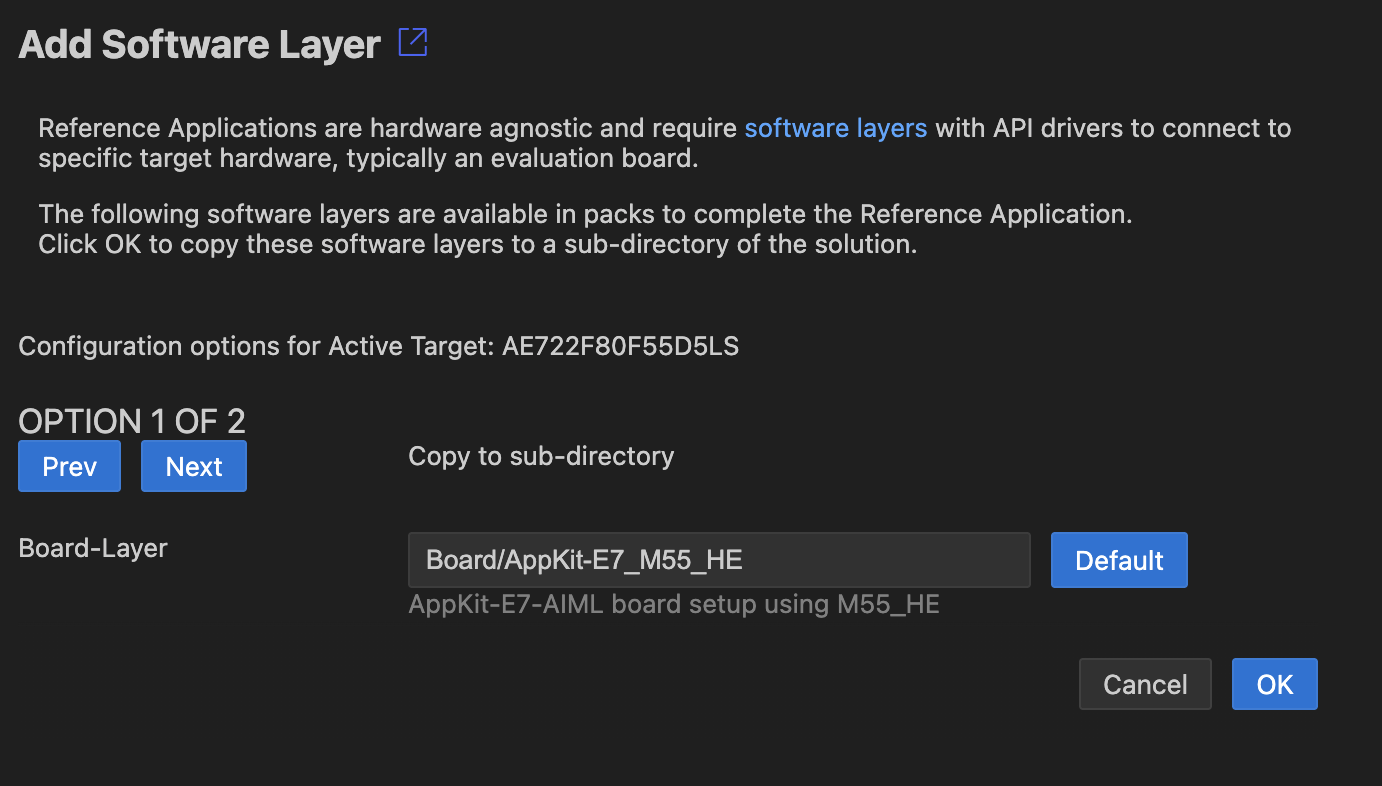

Create New Solution

- Open VS Code and use the Create a new solution dialog.

- Select one of the MLEK reference applications:

  - MLEK Keyword Spotting and Audio User Algorithm Template

  - MLEK Object Detection and Video User Algorithm Template

  - MLEK Generic Inference Runner (available in pack version 1.0+)

- Configure the target platform and toolchain.

Build and Run

Refer to the Arm CMSIS Solution extension documentation for how to use the CMSIS View, which offers convenient, pre-configured access to build and debug features.

Enable Ethos-U NPU support for your target

To enable NPU support for custom hardware:

- Open the *.csolution.yml file and look for the first target-type:

target-types:
  - type: MyCustomBoard
    device: STM32U585AIIx # Your target MCU
    variables:
      ...

To the "type" entry, add a suffix that identifies the NPU model and MAC configuration, for example:

  - type: MyCustomBoard-U55-128
  # or
  - type: MyCustomBoard-U85-512

If you do not specify a MAC variant, 256 will be the default.

This will configure the project to include the correct drivers and models for the NPU selected.
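The naming convention can be illustrated with a small host-side sketch. The helper `parse_npu_suffix` and the `NpuConfig` struct are hypothetical and are not part of the MLEK pack or the CMSIS tooling. They only show how a type name such as MyCustomBoard-U55-128 might break down into an NPU model and a MAC count, assuming the suffix has the form `-U<digits>` optionally followed by `-<MACs>`. The matching logic the pack actually uses may differ.

```cpp
#include <cstddef>
#include <string>

// Hypothetical result type: NPU model tag (e.g. "U55") and MAC count.
struct NpuConfig {
    std::string model;
    int macs;
};

// True if s is non-empty and contains only decimal digits.
static bool all_digits(const std::string& s) {
    if (s.empty()) return false;
    for (char c : s) {
        if (c < '0' || c > '9') return false;
    }
    return true;
}

// True if s looks like an NPU tag such as "U55" or "U85".
static bool is_npu_tag(const std::string& s) {
    return s.size() >= 2 && s[0] == 'U' && all_digits(s.substr(1));
}

// Illustrative parser, not part of MLEK. Accepts "<name>-U55-128" or
// "<name>-U55". Returns false when the type name has no NPU suffix.
bool parse_npu_suffix(const std::string& type, NpuConfig& out) {
    const int kDefaultMacs = 256;  // default MAC variant stated in the text

    const std::size_t last = type.rfind('-');
    if (last == std::string::npos) return false;
    const std::string tail = type.substr(last + 1);

    // Form without a MAC variant: "...-U55".
    if (is_npu_tag(tail)) {
        out.model = tail;
        out.macs = kDefaultMacs;
        return true;
    }

    // Form with a MAC variant: "...-U55-128".
    if (!all_digits(tail) || last == 0) return false;
    const std::size_t prev = type.rfind('-', last - 1);
    if (prev == std::string::npos) return false;
    const std::string model = type.substr(prev + 1, last - prev - 1);
    if (!is_npu_tag(model)) return false;

    out.model = model;
    out.macs = std::stoi(tail);
    return true;
}
```

With this sketch, MyCustomBoard-U55-128 yields model U55 with 128 MACs, MyCustomBoard-U85 yields model U85 with the default of 256, and a plain MyCustomBoard is reported as having no NPU suffix.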

Reference Applications

Audio Template: Keyword Spotting

The KWS template demonstrates real-time wake word detection:

Key Features:

- Real-time audio preprocessing (MFCC feature extraction)

- Optimized neural network inference using CMSIS-NN

- Configurable wake word models

- Performance profiling and benchmarking

Getting Started:

- Build and run the template on your target platform

- Speak the wake word (e.g. "Yes" or "Up")

- Observe detection results via UART output or LEDs

- Replace the model with your custom wake word model

Customization Points:

- kws/src/kws_model.cpp: Replace with your TensorFlow Lite model

- kws/src/audio_preprocessing.cpp: Modify audio preprocessing pipeline

- kws/config/kws_config.h: Adjust detection thresholds and parameters
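As a rough illustration of what a detection threshold does, the sketch below picks the highest-scoring class and reports it only when its score reaches the threshold. The function `detect_keyword` and its parameters are hypothetical and do not come from the MLEK sources. The real parameters live in kws/config/kws_config.h and may work differently.

```cpp
#include <cstddef>
#include <vector>

// Illustrative only, not the template's API.
// Returns the index of the highest-scoring class when its score is at
// least `threshold`, or -1 when no class is confident enough.
int detect_keyword(const std::vector<float>& scores, float threshold) {
    if (scores.empty()) return -1;

    // Find the class with the highest score (first one wins on ties).
    std::size_t best = 0;
    for (std::size_t i = 1; i < scores.size(); ++i) {
        if (scores[i] > scores[best]) best = i;
    }

    return scores[best] >= threshold ? static_cast<int>(best) : -1;
}
```

Raising the threshold in a scheme like this trades missed detections for fewer false triggers, which is the kind of tuning the configuration header is meant for.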

Video Template: Object Detection

The object detection template provides real-time computer vision:

Key Features:

- Camera input processing and frame buffering

- Object detection using MobileNet-based models

- Bounding box visualization

- Multi-object detection and classification

Getting Started:

- Build and run the template with camera input

- Point camera at objects for detection

- View detection results on display or via debug output

- Integrate your custom object detection model

Customization Points:

- object-detection/src/detection_model.cpp: Replace with your model

- object-detection/src/image_preprocessing.cpp: Modify image preprocessing

- object-detection/config/detection_config.h: Adjust detection parameters
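Multi-object detection pipelines commonly compare bounding boxes by intersection over union (IoU), for example when suppressing duplicate detections of the same object. The sketch below shows that computation only. The `Box` struct and the `iou` function are illustrative assumptions, not the template's actual types, and the corner-based box layout may differ from what your model outputs.

```cpp
#include <algorithm>

// Hypothetical box layout: corner coordinates, with x_max >= x_min
// and y_max >= y_min.
struct Box {
    float x_min;
    float y_min;
    float x_max;
    float y_max;
};

// Intersection over union of two axis-aligned boxes, in the range [0, 1].
float iou(const Box& a, const Box& b) {
    // Width and height of the overlap region, clamped to zero when disjoint.
    const float iw = std::max(0.0f, std::min(a.x_max, b.x_max) - std::max(a.x_min, b.x_min));
    const float ih = std::max(0.0f, std::min(a.y_max, b.y_max) - std::max(a.y_min, b.y_min));
    const float inter = iw * ih;

    const float area_a = (a.x_max - a.x_min) * (a.y_max - a.y_min);
    const float area_b = (b.x_max - b.x_min) * (b.y_max - b.y_min);
    const float uni = area_a + area_b - inter;

    // Guard against degenerate (zero-area) boxes.
    return uni > 0.0f ? inter / uni : 0.0f;
}
```

An overlap threshold applied to this value is one example of a detection parameter you might expose in a configuration header.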

Generic Template: Inference Runner

(available in pack version 1.0+)

The generic inference template provides maximum flexibility:

Key Features:

- Framework for any TensorFlow Lite model

- Configurable input/output tensors

- Performance benchmarking utilities

- Extensible architecture for custom applications

Getting Started:

- Replace the example model with your TensorFlow Lite model

- Configure input/output tensor specifications

- Implement custom preprocessing/postprocessing

- Build and test your custom ML application

Customization Points:

- inference_runner/Model/: Replace with your TensorFlow Lite model files

- inference_runner/src/inference_runner.cpp: Modify inference pipeline

- inference_runner/config/: Adjust model and application configuration
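Custom preprocessing and postprocessing for an int8-quantized TensorFlow Lite model usually involve converting between float values and int8 using the tensor's scale and zero point, where real = scale * (q - zero_point). The sketch below assumes such a model. `quantize_int8` and `dequantize_int8` are illustrative helpers, not functions from the MLEK pack, and the scale and zero-point values must be read from your own model's tensors.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Illustrative helper: float -> int8 using affine quantization.
// q = round(value / scale) + zero_point, clamped to the int8 range.
std::int8_t quantize_int8(float value, float scale, int zero_point) {
    long q = std::lround(value / scale) + zero_point;
    q = std::max(-128L, std::min(127L, q));
    return static_cast<std::int8_t>(q);
}

// Illustrative helper: int8 -> float, the inverse mapping.
// real = scale * (q - zero_point)
float dequantize_int8(std::int8_t q, float scale, int zero_point) {
    return scale * static_cast<float>(static_cast<int>(q) - zero_point);
}
```

Input values outside the representable range saturate at -128 or 127 rather than wrapping, which is usually the safer behavior for sensor data.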

Performance Optimization

All MLEK templates include built-in performance optimization features:

- CMSIS-NN Integration: Optimized neural network kernels for Cortex-M

- Ethos-U Acceleration: NPU acceleration for supported layers

- Memory Optimization: Efficient memory management and tensor allocation

- Profiling Tools: Built-in timing and resource usage measurements

Next Steps

After successfully running an MLEK template:

- Model Integration: Replace the example model with your trained TensorFlow Lite model

- Application Customization: Modify the application logic for your specific use case

- Performance Tuning: Optimize for your target constraints (memory, power, latency)

- Hardware Deployment: Test on your target hardware platform

- Production Deployment: Integrate into your final product design

For detailed examples and additional resources, refer to the individual template README files and the MLEK documentation.